We collaborate with the Association for Computing Machinery (ACM), the Raspberry Pi Foundation, and ML&systems conferences to share digital artifacts along with published papers in the form of portable CK workflows, automation actions, and reusable components. The goal is to make it easier for researchers and practitioners to reproduce and compare research techniques, build upon them, and participate in collaborative ML&systems benchmarking and optimization.

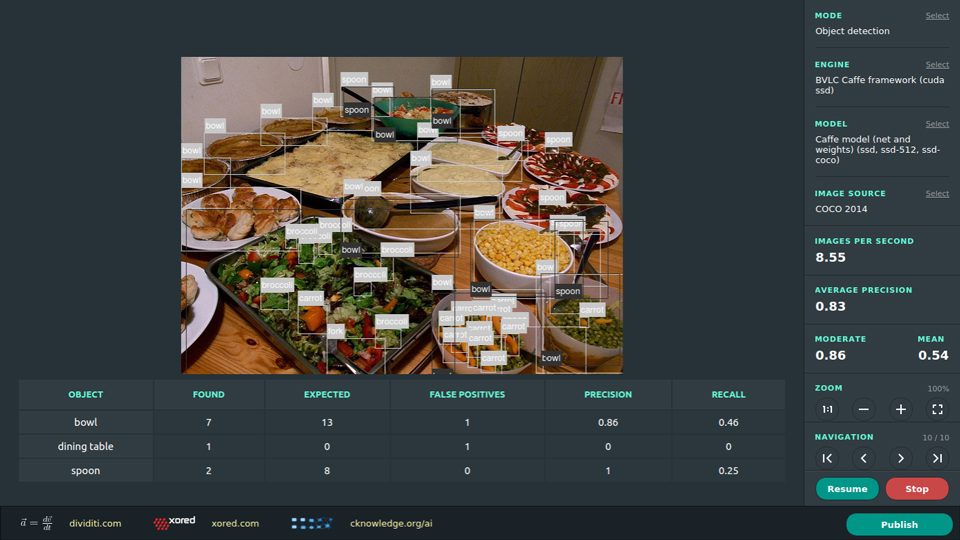

The CK framework powers cKnowledge.io - a prototype of an open platform to automate and simplify development, optimization and deployment of Pareto-efficient ML Systems across continuously changing software and hardware stacks from the cloud to the edge based on user requirements and constraints.

Arm is one of the first and main users of the Collective Knowledge Technology, which it uses to automate the design of more efficient computer systems for emerging workloads such as deep learning across the whole SW/HW stack from IoT to HPC. See the HiPEAC info (page 17) and the Arm TechCon'16 demo for more details about Arm and the cTuning foundation using CK to accelerate computer engineering.

We collaborate with colleagues from TomTom on a model-driven approach for a new generation of adaptive libraries, while automating and crowdsourcing experiments and ML-based modeling using the Collective Knowledge framework.

We collaborate with the EcoSystem Lab from the University of Toronto led by Professor Gennady Pekhimenko to develop portable, customizable and reusable AI benchmarks based on the open-source TBD suite and the Collective Knowledge Workflow framework.

The non-profit cTuning foundation regularly helps European and international projects (MILEPOST, PAMELA) to automate tedious R&D, develop sustainable research software, perform collaborative and reproducible experiments, and share results on public scoreboards powered by CK.

We collaborate with colleagues from the University of Edinburgh and the University of Glasgow, who use CK to automate and crowdsource optimization of mathematical libraries and compilers.

We collaborate with colleagues from ENS Paris to automate and crowdsource polyhedral optimization using CK.

We collaborate with colleagues from the Hartree SuperComputing Center to use CK for customizable and sustainable experimental workflows and to collaboratively optimize realistic workloads across various HPC systems.

Xored has developed several open-source CK extensions since 2016, including a DNN engine optimization front-end and an Android app to crowdsource DNN benchmarking.

University of Cambridge colleagues use the Collective Knowledge framework to develop sustainable software, accelerate research, automate experimentation, and reuse artifacts. For example, the portable and reproducible experimental workflow from the "Software Prefetching for Indirect Memory Accesses" article by Sam Ainsworth and Timothy M. Jones received a distinguished artifact award at CGO'17.

dividiti is an engineering company created by Grigori Fursin and Anton Lokhmotov to provide professional services based on the CK framework and help hardware vendors submit MLPerf™ benchmark results.

KRAI is an engineering company that develops open-source CK workflows to automate MLPerf™ submissions and provides optimization services to co-design efficient SW/HW stacks for robotics.

Alastair Donaldson's group uses CK to automate and crowdsource detection of compiler bugs (crowd-fuzzing of traditional, OpenCL and OpenGL compilers). The TETRACOM project "CLsmith in Collective Knowledge" won a HiPEAC technology transfer award in 2016.

The cTuning foundation is a non-profit R&D organization led by Grigori Fursin. It coordinates and sponsors all CK developments and reproducibility initiatives such as Artifact Evaluation.