Grigori Fursin, PhD

I am a British national living permanently in the Greater Paris area. I am the Founder of cTuning Labs (AI Systems R&D), cKnowledge.org and cTuning.org, and a Strategic Advisor at LUMAI. Previously, I was the Founder and Architect of a collaborative AI benchmarking and optimization platform acquired by OctoAI (now Nvidia), where I later served as VP of MLOps. I have also been Co-Director of the Intel Exascale Lab and Head of the AI Systems R&D Lab at FlexAI, as well as a Senior Tenured Research Scientist at INRIA and an Adjunct Professor at the University of Paris-Saclay. I hold a PhD in Self-Optimizing Compilers and Systems from the University of Edinburgh.

Summary

I'm a strategic technologist, computer scientist, full-stack AI/ML engineer, business executive, strategic advisor, and open-source advocate with more than 20 years of experience driving deep-tech innovation from research to real-world impact. I'm passionate about creating intelligent, self-optimizing systems that are efficient and cost-effective, and about bridging the gap between cutting-edge research, engineering execution, and business outcomes. My career spans roles including CEO, CTO, Head of R&D Labs, VP of MLOps, Chief Architect, Tenured Research Scientist, AI Systems Engineer, and Adjunct Lecturer.

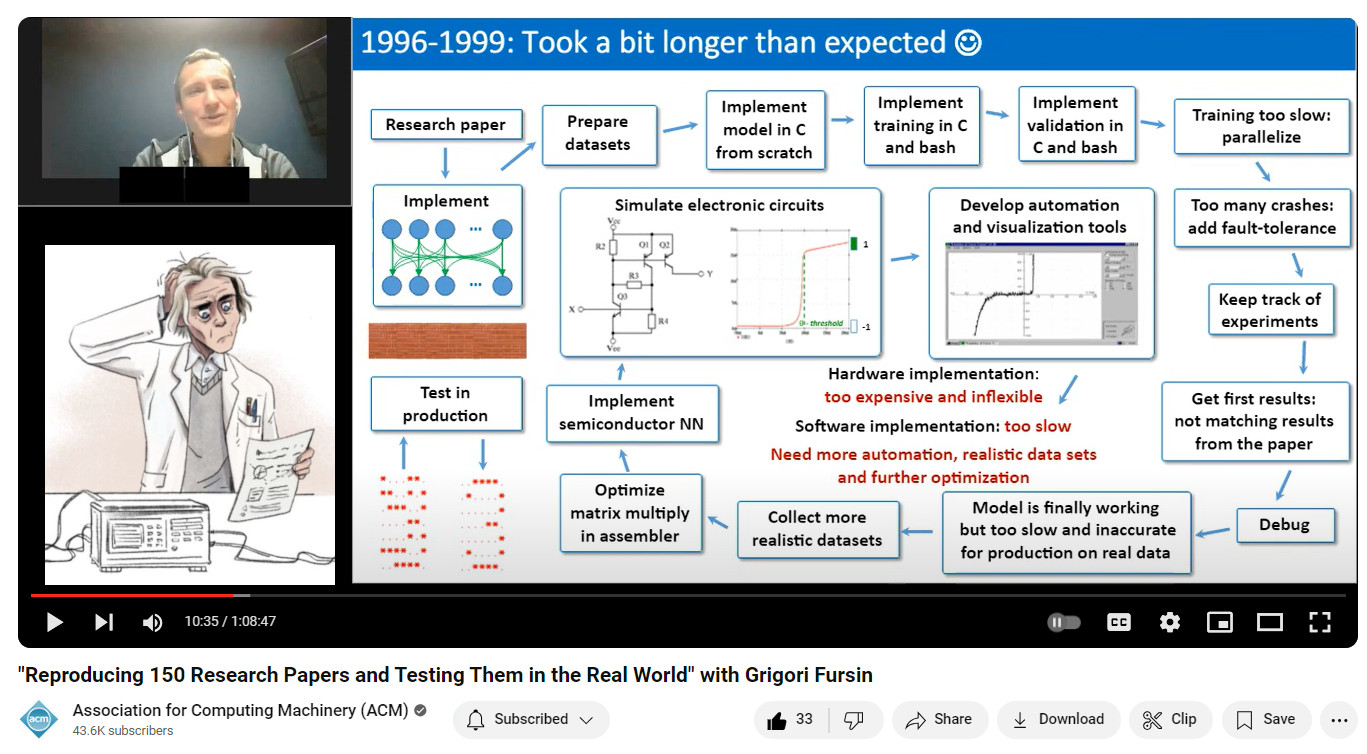

I hold a PhD in self-optimizing systems and compilers and have led pioneering work in machine learning systems, workflow automation, knowledge management, and reproducible R&D. From designing analog neural hardware in the 1990s to developing one of the first self-optimizing compiler stacks and founding startups acquired by leading AI companies, I've consistently worked at the intersection of innovation and application. I also invented and developed a modular automation framework adopted by MLCommons, a consortium of over 100 AI and systems companies, to test, benchmark, and optimize a wide range of AI models and datasets across diverse hardware and software platforms, from cloud to edge.

I founded and continue to develop the cTuning and Collective Knowledge ecosystems to automate knowledge management and optimize modern AI/ML workloads for maximum efficiency and cost-effectiveness, while accelerating innovation and collaborative R&D: https://cKnowledge.org

My key strengths:

- Initiating and leading high-impact, long-term, interdisciplinary deep-tech R&D projects

- Developing automation frameworks and platforms for efficient software/hardware co-design

- Advising C-level teams on technology strategy, innovation, cost optimization, and faster time-to-market

- Mentoring researchers, engineers, and students

I'm open to opportunities in advisory, technical leadership, innovation strategy, and individual-contributor roles where I can unite research, engineering, and business teams to deliver breakthrough deep-tech R&D and build ecosystems with lasting real-world impact.

You can learn more about my projects and vision on my Bio and CV page.