Grigori Fursin, PhD

Brief biography:

I am a forward-thinking and agile computer scientist, inventor, full AI/ML stack engineer, strategic advisor to startups, investors, and executive teams, educator, mentor, long-time open source contributor, and passionate advocate for open science. I hold a PhD in self-optimizing compilers and systems from the University of Edinburgh. My interdisciplinary background spans computer engineering (with expertise in co-designing the full hardware-software stack from the cloud to the edge), machine learning, AI systems, data analytics, workflow automation, knowledge management, and physics and electronics. I am passionate about building innovative solutions to real-world problems and about unifying and automating R&D processes to enhance efficiency and reduce costs.

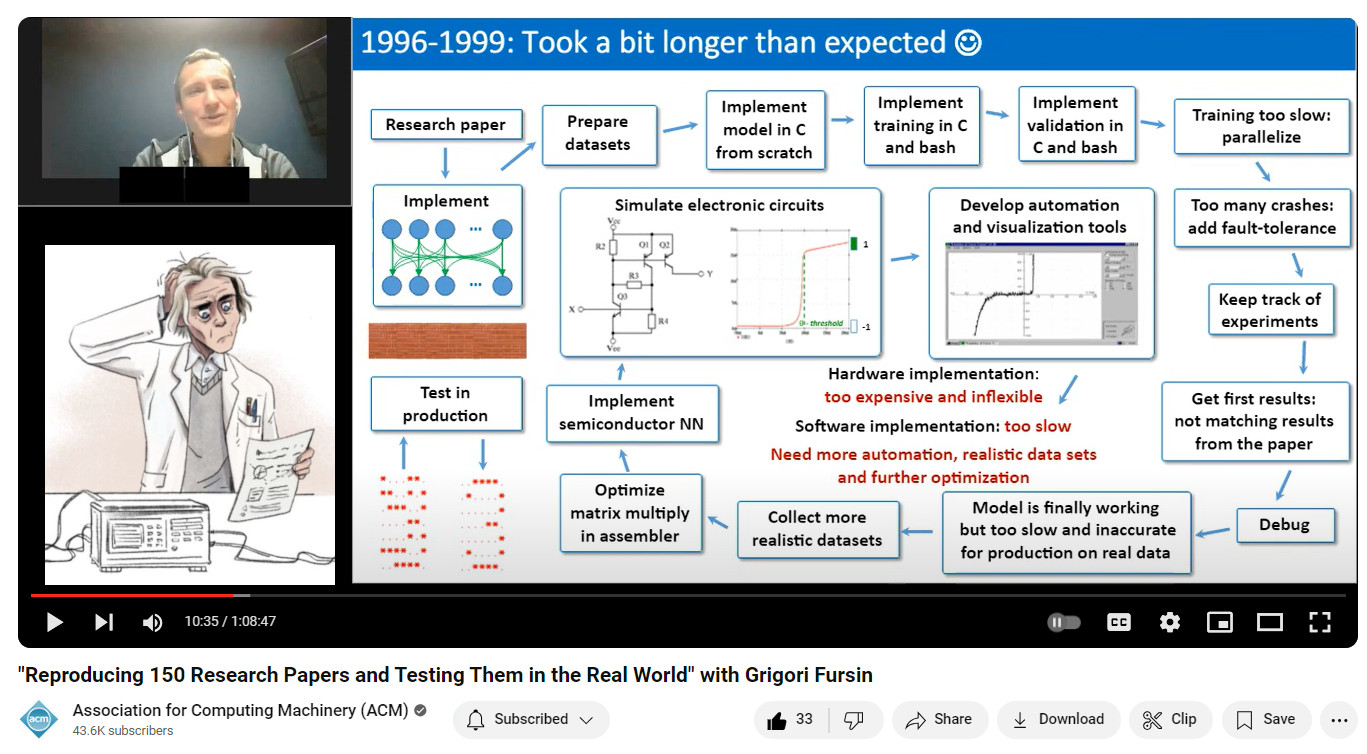

Fascinated by the prospects of AI and robotics, I began my R&D career in the mid-1990s as an undergraduate, taking on a technical leadership role to develop Hopfield-based analog semiconductor neural networks from scratch. This included complete development and automation of software, hardware, models, and datasets for training, inference, electronic simulation, and prototyping—since nothing existed at the time.

This project took much longer than I originally expected and revealed numerous issues in R&D methodologies, tools, and inefficiencies in computer engineering. As a result, I decided to switch to computer science and pursue PhD research to address these challenges. This interdisciplinary foundation and experience enabled me to pioneer and champion visionary uses of machine learning, AI, crowd-tuning, and crowd-learning to co-design more efficient, cost-effective, and scalable computer systems—including compilers, runtimes, software, and hardware—during my PhD at the University of Edinburgh and postdoctoral research at Inria.

I initiated and led R&D efforts that addressed the growing complexity of modern systems and served as a precursor to self-optimizing and agentic systems, AutoML, workflow automation, agent-based optimization, federated learning, reproducible experimentation, and universal, efficient, technology-agnostic compute. It also enabled me to initiate and support open science and reproducibility initiatives starting in 2008, when I launched cTuning.org (followed by cKnowledge.org with my Collective Knowledge Technology aka CK in 2014) and released all my research code, data, models, and experiments for our ML-based self-optimizing compiler—considered the first of its kind (ACM TechTalk'21). I was honored to receive the ACM CGO Test of Time Award, multiple Best Paper Awards, the INRIA Award for Scientific Excellence, and the EU HiPEAC Technology Transfer Award for this research and open-source tools.

After serving as a senior tenured research scientist at INRIA, an adjunct professor at the University of Paris-Saclay, and co-director of the Intel Exascale Lab, I transitioned my research and open-source tools into industry. I first established a non-profit cTuning foundation and co-founded a successful engineering company to automatically benchmark and optimize deep learning across diverse software and hardware stacks, with a focus on mobile phones and edge devices. I helped bootstrap it as CTO and Chief Architect, quickly growing it to $1M+ in revenue with just 4 people, thanks to my CK automation technology. I then joined Entrepreneur First, a highly selective company-building program for scientists and technologists, where I learned to build lean startups and avoid common pitfalls. As a result, I founded and bootstrapped two startups in the fields of performance optimization, MLOps automation, and knowledge management—the latter of which was acquired by OctoAI (now part of NVIDIA).

During that time, I invented the Collective Mind automation language (CM/CMX), which was adopted by MLCommons—a consortium of over 100 AI and systems companies—to test and benchmark a wide range of AI models and datasets across diverse hardware and software platforms, from cloud to edge. My CM technology has automated thousands of MLPerf submissions and enabled the discovery of some of the most performance- and cost-efficient AI solutions using commodity servers, outperforming high-end systems from Nvidia. I am now developing the next generation of this automation.

At the same time, I remained actively involved in community service and open-source initiatives. I helped establish MLCommons and launch reproducibility efforts at ACM and IEEE conferences: cTuning.org/ae . I also introduced a unified artifact appendix, which has since been adopted by major conferences such as ASPLOS, CGO, PPoPP, SuperComputing and MICRO. Finally, I co-organized several successful Quantum Hackathons, including one at Ecole 42 in Paris, where we utilized my CK workflow automation and platform for collaborative benchmarking and optimization of Quantum workloads (Hackathon page and a list of my events).

Throughout my career, I’ve been honored to collaborate with and learn from brilliant minds across leading universities, non-profits, startups, and companies — including Google, Amazon, Meta, Arm, AMD, Intel, IBM, Qualcomm, NVIDIA, Raspberry Pi, OpenAI, Tesla, OctoAI, Neural Magic, Red Hat, Dell, HPE, Lenovo, Apple, INRIA, ACM, IEEE, HiPEAC, MLCommons, and the Linux Foundation: Acknowledgments (1), Acknowledgments (2), and Acknowledgments (3).

My passion lies in applying my knowledge, experience, and tools to accelerate the journey from deep tech research to real-world production—while building intelligent, self-optimizing systems. I regularly support startups, enterprises, universities, non-profits, researchers, students, and investors in rapidly prototyping novel ideas, launching innovative deep-tech projects, reducing time to market, and delivering tangible impact through collaborative, reproducible, interdisciplinary, quantifiable, and automated R&D methodologies.

While I actively prototype full-stack projects and contribute hands-on, I bring the most value in roles such as strategic advisor, technical manager, R&D lab director, educator, research scientist, or senior individual contributor. I focus on bridging research, engineering, and product teams—helping them navigate complex, rapidly evolving technology landscapes, manage project complexity, avoid common pitfalls, and achieve meaningful outcomes efficiently, even with very limited resources and time.

In 2024, I began prototyping the next generation of my Collective Knowledge platform, aimed at solving the complexity of AI systems. My goal is to develop a universal compute engine that simplifies running models on any hardware with any software in the most efficient and cost-effective way while significantly reducing all associated costs. This initiative builds on my existing work, including Collective Mind, virtualized MLOps, MLPerf, Collective Knowledge Playground, and reproducible optimization tournaments. For more details, see my white paper, and feel free to reach out if you would like to learn more. I also joined FlexAI as Head of their R&D Lab, where I am developing FlexBench to track state-of-the-art models like DeepSeek and to benchmark and optimize them across diverse software and hardware stacks. This work is based on the MLPerf methodology and my MLCommons CM workflow automation framework.

In my spare time, I enjoy spending time with my two children, reading, learning new skills, playing soccer (having competed semi-professionally), hiking, traveling, teaching, developing agentic automations and platforms for collaborative and reproducible R&D, and brainstorming future projects.

My key open-source software developments:

- My Collective Knowledge (CK) and Collective Mind (CM) workflow automation technology hosted by MLCommons: github.com/mlcommons/ck, doi.org/10.5281/zenodo.8105338

- Virtual MLOps scripts and MLPerf automations: CMX format, CM format

- Prototype of a next-generation virtual AI platform (universal AI compute): access.cKnowledge.org (new version is under development)

- Open optimization challenges and hackathons I helped organize: View, Leaderboard

- Virtual DevOps and MLops used to automate above challenges: View

- Public results including MLPerf: View

- Prototype of FlexBench to benchmark vLLM across diverse hardware, software and HuggingFace models using the MLPerf methodology and CM automation: MLPerf inference v5.0 GitHub, Open MLPerf dataset at HuggingFace

- Artifact Evaluation website and unified artifact appendix adopted and extended by major CS conferences:

- Discontinued Collective Knowledge portable ML/AI solutions (2019-2021)

- Discontinued Collective Knowledge crowd-results (2015-2018)

- Discontinued Collective Mind repository (2011-2014)

- Discontinued Collective Tuning portal (2007-2011)

My key presentations and publications to help you gain insight into my projects and long-term vision:

- Invited ACM TechTalk'21: Reproducing 150 Research Papers and Testing Them in the Real World

- HPCA'25: MLPerf Power: Benchmarking the Energy Efficiency of Machine Learning Systems from Microwatts to Megawatts for Sustainable AI

- ArXiv white paper'24: Enabling more efficient and cost-effective AI/ML systems with Collective Mind, virtualized MLOps, MLPerf, Collective Knowledge Playground and reproducible optimization tournaments,

- ACM REP'23 keynote: Collective Mind: toward a common language to facilitate reproducible research and technology transfer

- Nature Machine Intelligence'23: Federated benchmarking of medical artificial intelligence with MedPerf

- Philosophical Transactions of the Royal Society'21: Collective knowledge: organizing research projects as a database of reusable components and portable workflows with common interfaces

- Quantum Collective Knowledge Hackathon at École 42 (Paris): GitHub with code and photos

- Joint presentation with Amazon at O’Reilly AI conference’18: Scaling deep learning on AWS using C5 instances with MXNet, TensorFlow, and BigDL: From the edge to the cloud

- Presentation from General Motors about my Collective Knowledge technology: Collaboratively Benchmarking and Optimizing Deep Learning Implementations

- ArXiv preprint'18: A collective knowledge workflow for collaborative research into multi-objective autotuning and machine learning techniques

- ArXiv prepring'15: Towards Performance- and Cost-Aware Software Engineering as a Natural Science

- Report of Dagstuhl Perspectives Workshop'15: Artifact Evaluation for Publications

- ACM TRUST'14 at PLDI'14: Proceedings of the 1st ACM SIGPLAN Workshop on Reproducible Research Methodologies and New Publication Models in Computer Engineering

- IJPP'11: Milepost gcc: Machine learning enabled self-tuning compiler

- MICRO'09: Portable compiler optimisation across embedded programs and microarchitectures using machine learning

- HiPEAC'09: Predictive runtime code scheduling for heterogeneous architectures

- CGO'07: Rapidly selecting good compiler optimizations using performance counters

- CGO'06: Using machine learning to focus iterative optimization

Brief summary of my current activities:

- Founder, President, and Chief Scientist of the cTuning.org — a non-profit educational organization and founding member of MLCommons developing open-source tools and methodologies to support reproducibility initiatives, artifact evaluation and open science in collaboration with ACM, IEEE and MLCommons since 2008. Please see Artifact Evaluation page for more details.

- Founder and Architect of the Collective Knowledge Playground - an educational platform for learning how to co-design software and hardware to run AI, ML and other emerging workloads efficiently and cost-effectively across diverse models, datasets, software and hardware (trading off performance, power consumption, accuracy, cost and other characteristics). CK playground leverages the MLCommons CMX workflow automation framework with virtual MLOps developed in collaboration with MLCommons, cTuning.org and other organizations. Please see ArXiv white paper and an online catalog of reusable and virtual automation recipes for MLOps and DevOps.

- Head of R&D Lab at FlexAI, coordinating efforts to leverage AI for co-designing more efficient and cost-effective AI systems.

Core technologies used: HuggingFace models and datasets, vLLM, PyTorch, Triton, TensorRT, Nsight, MLPerf, OpenSearch, MLCommons CMX, FastAPI, Docker, Bayesian search, reinforcement learning and LLMs, Nvidia and AMD GPUs. - Organizer of reproducibility initiatives and artifact evaluation for AI, ML and Systems conferences and MLPerf benchmarks in collaboration with ACM, IEEE and MLCommons since 2013. I am leading the development of a common interface and automation language to make it easier to rerun and reuse code, data and experiments from published papers - see my ACM Tech Talk'21, ACM REP'23 keynote and white paper'24 for more details.

- Member of the Program Committee at ACM Conference on Reproducibility and Replicability 2025.

- founder and co-chair of the MLCommons Task Force on Automation and Reproducibility to modularize and automate MLPerf benchmarks using my CM framework (white paper);

- author and tech.lead of the Collective Mind workflow automation framework (CM) adopted by MLCommons and the Autonomous Vehicle Computing Consortium (AVCC) to modularize MLPerf benchmarks and make it easier to run them across diverse models, data sets, software and hardware from different vendors using portable, reusable and technology-agnostic automation recipes (see online catalog of MLOps and MLPerf scripts and online docs to run MLPerf inference benchmarks). I donated this open-source technology to MLCommons to benefit everyone and continue developing it as a community effort. You can learn more about this project in this white paper. Since 2025, we split CM developments into an extended version of CM (CMX) and a simplified version of CM for MLPerf. I thank our great contributors for their feedback and support.

- vice president of MLOps at OctoML where I prototyped the first version of CM and CM4MLOps together with the cTuning foundation before donating it to MLCommons to benefit everyone;

- founder and chief architect of the virtual MLOps platform (cKnowledge.io) acquired by OctoML (now Nvidia);

- author of the Collective Knowledge technology (CK) powering cKnowledge.io;

- author of the Artifact Evaluation and Reproducibility checklist (Unified Artifact Appendix) for ACM/IEEE conferences (see example of my artifact appendix at the end of this ASPLOS'24 paper "PyTorch 2: Faster Machine Learning Through Dynamic Python Bytecode Transformation and Graph Compilation");

- co-founder of a CodeReef platform for universal MLOps with Nicolas Essayan;

- co-director of the Intel Exascale Lab and tech.lead for performance analysis, optimization and co-design of high-performance and cost-effecitve computer systems;

- senior tenured scientist at INRIA developing the foundations to co-design more efficient and cost-effective computer systems using auto-tuning, machine-learning and run-time adaptation;

- research associate at the University of Edinburgh;

- holder of the PhD in computer science from the University of Edinburgh with the Overseas Research Student Award (self-optimizing compilers, run-time systems and software/hardware co-design);

- recipient of the European technology transfer award, ACM CGO test of time award and INRIA award of scientific excellence for my original research to use AI, ML, federated learning and collective tuning (cTuning) to automate development of high-performance and cost-effective computer systems and reduce R&D costs and time to market by an order of magnitude.

Timeline:

-

Professional Career

- 2024-cur.: Head of R&D Lab at FlexAI, coordinating efforts to use AI to co-design more efficient and cost-effective AI systems.

- 2023-2024: Coordinator and developer of MLPerf automations at MLCommons, bootstrapping the development of Collective Mind automation recipes for MLOps, MLPerf, and the ABTF (Automotive Benchmarking Task Force). This was part of a collaborative engineering effort to run MLPerf Inference benchmarks across a wide range of models, datasets, software stacks, and hardware platforms from various vendors — all in a unified, reproducible, and automated way. My MLCommons Collective Mind framework (CM) with MLPerf automations was successfully validated by automating ~90% of all MLPerf Inference v4.0 performance and power submissions. It also enabled identification of top-performing and cost-efficient software/hardware configurations for AI systems from different vendors.

- 2023-cur.: Founder of the Collective Knowledge Playground - a free, open-source and technology-agnostic platform for collaborative benchmarking, optimization and comparison of AI and ML systems via open and reproducible challenges powered by my CK/CM technology. Our technology was successfully validated by the community and MLCommons members by automating, unifying and reproducing more than 80% of all MLPerf inference benchmark submissions (and 98% of power results) with very diverse technology from Neural Magic, Qualcomm, DELL, HPE, Lenovo, Hugging Face, Nvidia, AMD, Intel and Apple across diverse CPUs, GPUs and DSPs with PyTorch, ONNX, QAIC, TF/TFLite, TVM and TensorRT using popular cloud providers (GCP, AWS, Azure) and individual servers and edge devices provided by our volunteers and contributors.

- 2021-2023: Vice President at OctoAI (now Nvidia) leading the development of the 2nd generation of my open-source CK workflow automation technology (aka Collective Mind) and connecting it was TVM. Our technology was adopted by MLCommons (125+ AI software and hardware companies) to modularize AI/ML Systems and automate their development, optimization and deployment from the cloud to the edge.

- 2019-2021: Founder and developer of the cKnowledge.io platform to organize AI, ML and Systems knowledge and enable efficient computing based on FAIR principles (acquired by OctoAI (now Nvidia)).

- 2019: Founder in residence at Entrepreneur First learning how to build deep tech startups and MVPs from scratch while avoiding numerous pitfalls and minimizing all risks. This knowledge and experience helped me to meet many amazing people and create the cKnowledge.io platform acquired by OctoML.ai.

- 2015-2019: Co-founder and CTO of dividiti, a commercial engineering company based on my Collective Knowledge (CK) framework; led the company to $1M+ in revenue with Fortune 50 customers. Donated CK technology to MLCommons in 2021.

- 2016-2018: R&D project partner with General Motors (AI/ML/SW/HW co-design project).

- 2017-2018: R&D project partner with the Raspberry Pi foundation (crowd-tuning and machine learning).

- 2015-2016: Subcontractor for Google (performance autotuning and SW/HW co-design).

- 2014-2015: R&D project partner with Arm (EU H2020 TETRACOM project).

- 2012-2014: Tenured Research Scientist (associate professor) at INRIA.

- 2010-2011: Co-director of the Intel Exascale Lab (France) and a head of the software/hardware optimization and co-design group (on sabbatical from INRIA).

- 2007-2010: Guest lecturer at the University of Paris-Sud.

- 2007-2010: Tenured Research Scientist (assistant professor) at INRIA.

- 1999-2006: Research Associate at the University of Edinburgh.

- 2019: Entrepreneurs First 2nd cohort in Paris.

- 2004: PhD in computer science with the ORS award from the University of Edinburgh.

- 1999: MS in computer engineering with a golden medal (summa cum laude) from MIPT.

- 1997: BS in electronics, mathematics and machine learning (summa cum laude) from MIPT.

- 2025: MCP: Build Rich-Context AI Apps with Anthropic (DeepLearning)

- 2025: AI Agents and Agentic AI with Python & Generative AI (Coursera)

- 2025: Foundations of Project Management (Coursera/Google)

- 2024: Generative AI with Large Language Models (Coursera)

- 2024: Efficiently Serving LLMs (DeepLearning)

- 2024: Intro to Federated Learning (DeepLearning)

- 2024: Quantization Fundamentals with Hugging Face (DeepLearning)

- 2023: Learning How to Learn (Coursera)

- 2021: Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization (Coursera)

- 2021: Structuring Machine Learning Projects (Coursera)

- 2021: Neural Networks and Deep Learning (Coursera)

- 2021: AI for everyone (Coursera)

- 2020: Machine Learning (Coursera)

- 2024-cur: leading the development of the next generation of my Collective Mind and Collective Knowledge technology in collaboration with MLCommons to help researchers, engineers and students co-design software and hardware for more efficient and cost-effective AI;

- 2023-2024: led the development of the Collective Knowledge playground to benchmark and optimize AI/ML Systems via reproducible optimization challenges and tournaments;

- 2022-2024: led the development of the Collective Mind automation framework (CM) to modularize AI/ML systems and make it easier to benchmark and optimize them across diverse and rapidly evolving models, data sets, software and hardware from different vendors - I donated CK and CM to MLCommons to benefit everyone and continue developing at as a community effort (see white paper);

- 2014-2019: developed the Collective Knowledge framework to automate and accelerate design space exploration of AI/ML/SW/HW stacks while balancing speed, accuracy, energy and costs;

- 2010-2011: led the development of the cTuning 2 automation framework to benchmark emerging workloads across diverse hardware at Intel Exascale Lab;

- 2007-2009: led the development of the ML-based compiler and the cTuning.org platform across 5 teams to automate and crowdsource optimization of computer systems - this technology is considered to be the first in the world;

- 2007-2009: led the development of the compiler plugin framework in collaboration with Google and Mozilla that was added to the mainline GCC powering all Linux-based computers and helped to convert production compilers into research toolsets for machine learning;

- 2024: Founder of the Collective Knowledge Playground;

- 2020: founded and developed the cKnowledge.io platform with virtual MLOps acquired by OctoML.ai (now Nvidia);

- 2019: prototyped CodeReef platform with Nicolas Essayan;

- 2019: joined Entrepreneur First, a highly selective company-building program for scientists and technologists, where I learned to build lean startups and avoid common pitfalls.

- 2025: Program Committee Member, ACM Conference on Reproducibility and Replicability 2025

- 2024: Co-organizer of the artifact evaluation at IEEE/ACM MICRO'24

- 2024: MLPerf liaison at the Student Cluster Competition at SuperComputing'24

- 2022-2024.: Founder of the MLCommons taskforce on automation and reproducibility - I donated my open-source Collective Mind technology to MLCommons to benefit everyone and continue developing it as a community effort to modularize and automate MLPerf benchmarks (see my ACM REP'23 keynote and white paper for more details).

- 2020-cur.: A founding member of MLCommons to accelerate ML and systems innovation.

- 2020-cur.: A founding member of the ACM SIG on reproducibility.

- 2019-cur.: An early member of the MLPerf.org.

- 2017-cur.: A founding member of the ACM taskforce on reproducibility.

- 2015-cur.: Author of the unified artifact appendix and reproducibility checklist now used and extended by many ACM and IEE conferences;

- 2015-cur.: co-organizer of many reproducible optimization tournaments and hackathons to co-design efficient AI and ML systems powered by my CK&CM technology;

- 2014-2016: co-author of the ACM artifact review and badging policy.

- 2014-cur.: helped to reproduce 150+ research papers from ML and systems conferences;

- 2014-cur.: Introduced reproducibility initiatives and artifact checklists at MLSys, ASPLOS, MICRO, CGO, PPoPP and other ML and systems conferences to validate results from published papers (see white paper and ACM TechTalk for more details);

- 2014-cur.: Founder and president of the cTuning foundation, France.

- 2008-cur.: Founder and the architect of cTuning.org.

- 1999-cur.: Evangelist of collaborative and reproducible research and experimentation in computer engineering.

- I prepared the foundations to combine machine learning, autotuning, knowledge sharing and federated learning to automate and accelerate the development of efficient software and hardware by several orders of magnitude (Google scholar);

- developed Collective Knowledge and Collective Mind technology and started educational initiatives with ACM, IEEE, HiPEAC, Raspberry Pi foundation and MLCommons to bring my research and expertise to the real world to benefit everyone;

- prepared and tought M.S. course at the Paris-Saclay University on using ML to co-design efficient software and hardare (self-optimizing computing systems);

- gave 100+ invited talks about my R&D;

- honored to receive the ACM CGO test of time award, several best papers awards, INRIA award of scientific excellence and EU HiPEAC technology transfer award.

- 2017: ACM CGO test of time award for my research on ML-based self-optimizing compilers.

- 2016-cur.: Microsoft Azure Research award to support cKnowledge.org.

- 2015: European technology transfer award for my Collective Knowledge automation technology.

- 2012: INRIA scientific excellence award and personal fellowship.

- 2010: HiPEAC award for PLDI paper.

- 2009: HiPEAC award for MICRO paper.

- 2006: CGO best paper award.

- 2000: Overseas research student award for my Ph.D.

- Founding Member, MLCommons (founding member)

- Reproducibility Champion, ACM

- Member, IEEE Computer

- Member, HiPEAC

- 2023-cur.:

Developed a prototype of the Collective Knowledge playground

to collaboratively benchmark and optimize AI, ML and other emerging applications

in an automated and reproducible way via open challenges - see white paper

for more details.

I used MLPerf benchmarks; PyTorch; my CK and CM automation technology; Python; Streamlit; .

- 2022-2024.:

Prototyped the Collective Mind automation framework

using virtual MLOps scripts and MLPerf automations to run MLPerf and other benchmarks and workloads

in a unified and automated manner across diverse models, datasets, software and hardware

from different vendors

I used Python; loadgen; Docker/Podman; HuggingFace/Transformers/PyTorch/ONNX/TF; Ubuntu/Windows/RHEL/MacOS; Nvidia/Intel/AMD/Qualcomm/Arm ; AWS/Azure/Scaleway .

- 2020-cur.:

Developed a prototype of the cKnowledge.io to organize all knowledge

about AI, ML, systems, and other innovative technology from my academic and industrial partners

in the form of portable CK workflows, automation actions, and reusable artifacts.

I use it to automate co-design and comparison of efficient AI/Ml/SW/HW stacks

from data centers and supercomputers to mobile phones and edge devices

in terms of speed, accuracy, energy, and various costs.

I also use this platform to help organizations reproduce innovative AI, ML, and systems techniques from research papers

and accelerate their adoption in production.

I collaborate with MLPerf.org to automate and simplify ML&systems benchmarking

and fair comparison based on the CK concept and DevOps/MLOps principles.

I used the following technologies: Linux/Windows/Android; Python/JavaScript/CK; apache2; flask/django; ElasticSearch; GitHub/GitLab/BitBucket; REST JSON API; Travis CI/AppVeyor CI; DevOps; CK-based knowledge graph database; TensorFlow; Azure/AWS/Google cloud/IBM cloud .

- 2018-cur.:

Enhanced and stabilized all main CK components

(software detection, package installation, benchmarking pipeline, autotuning, reproducible experiments, visualization)

successfully used by dividiti to automate MLPerf benchmark submissions.

I used the following technologies: Linux/Windows/Android; CK/Python/JavaScript/C/C++; statistical analysis; MatPlotLib/numpy/pandas/jupyter notebooks; GCC/LLVM; TensorFlow/PyTorch; Main AI algorithms, models and data sets for image detection and object classification; Azure/AWS/Google cloud/IBM cloud; mobile phones/edge devices/servers; Nvidia GPU/EdgeTPU/x86/Arm architectures .

- 2017-2018:

Developed CK workflows

and live dashboards for

the 1st open ACM REQUEST tournament

to co-design Pareto-efficient SW/HW stacks for ML and AI in terms of speed, accuracy, energy, and costs.

We later reused this CK functionality to automate MLPerf submissions.

I used the following technologies: CK; LLVM/GCC/iCC; ImageNet; MobileNets, ResNet-18, ResNet-50, Inception-v3, VGG16, SSD, and AlexNet; MXNet, TensorFlow, Caffe, Keras, Arm Compute Library, cuDNN, TVM, and NNVM; Xilinx Pynq-Z1 FPGA/Arm Cortex CPUs/Arm Mali GPGPUs (Linaro HiKey960 and T-Firefly RK3399)/a farm of Raspberry Pi devices/NVIDIA Jetson TX2/Intel Xeon servers in Amazon Web Services, Google Cloud and Microsoft Azure .

- 2017-2018:

Developed an example of the autogenerated and reproducible paper

with a Collective Knowledge workflow for collaborative research into multi-objective autotuning and machine learning techniques

(collaboration with the Raspberry Pi foundation).

I used the following technologies: Linux/Windows; LLVM/GCC; CK; C/C++/Fortran; MILEPOST GCC code features/hardware counters; DNN (TensorFlow)/KNN/SVM/decision trees; PCA; statistical analysis; crowd-benchmarking; crowd-tuning .

- 2015-cur.:

Developed the Collective Knowledge framework (CK)

to help the community

automate typical tasks in ML&systems R&D,

provide a common format, APIs, and meta descriptions for shared research projects,

enable portable workflows,

and improve the reproducibility and reusability in computational research.

We now use it to automate benchmarking, optimization and co-design of AI/ML/SW/HW stacks

in terms of speed, accuracy, energy and other costs across diverse platforms

from data centers to edge devices.

I used the following technologies: Linux/Windows/Android/Edge devices; Python/C/C++/Java; ICC/GCC/LLVM; JSON/REST API; DevOps; plugins; apache2; Azure cloud; client/server architecture; noSQL database (ElasticSearch); GitHub/GitLab/BitBucket; Travis CI/AppVeyor CI; main math libraries, DNN frameworks, models, and datasets .

- 2012-2014: Prototyped the Collective Mind framework - prequel to CK. I focused on web services but it turned out that my users wanted basic CLI-based framework. This feedback motivated me to develop a simple CLI-based CK framework.

- 2010-2011: Helped to create KDataSets (1000 data sets for CPU benchmarks) (PLDI paper, repo).

- 2008-2010:

Developed the Machine learning based self-optimizing compiler connected with cTuning.org

in collaboration with IBM, Arc (Synopsys), Inria, and the University of Edinburgh. This technology is considered to be

the first in the world;

I used the following technologies: Linux; GCC; C/C++/Fortran/Prolog; semantic features/hardware counters; KNN/decision trees; PCA; statistical analysis; crowd-benchmarking; crowd-tuning; plugins; client/server architecture .

- 2008-2009: Added the function cloning process to GCC to enable run-time adaptation for statically-compiled programs (report).

- 2008-2009: Developed the interactive compilation interface now available in mainline GCC (collaboration with Google and Mozilla).

- 2008-cur.:

Developed the cTuning.org portal

to crowdsource training of ML-based MILEPOST compiler

and automate SW/HW co-design similar to SETI@home. See press-releases from IBM

and Fujitsu about my cTuning concept.

I used the following technologies: Linux/Windows; MediaWiki; MySQL; C/C++/Fortran/Java; MILEPOST GCC; PHP; apache2; client/server architecture; KNN/SVM/decision trees; plugins .

- 2009-2010: Created cBench (collaborative CPU benchmark to support autotuning R&D) and connected it with my cTuning infrastructure from the MILEPOST project.

- 2005-2009: Created MiDataSets - multiple datasets for MiBench (20+ datasets per benchmark; 400 in total) to support autotuning R&D.

- 1999-2004:

Developed a collaborative infrastructure to autotune HPC workloads (Edinburgh Optimization Software) for the EU MHAOTEU project.

I used the following technologies: Linux/Windows; Java/C/C++/Fortran; Java-based GUI; client/server infrastructure with plugins to integrate autotuning/benchmarking tools and techniques from other partners .

- 1999-2001:

Developed a polyhedral source-to-source compiler for memory hierarchy optimization in HPC used in the EU MHAOTEU project.

I used the following technologies: C++; GCC/SUIF/POLARIS .

- 1998-1999:

Developed a web-based service to automate the submission and execution of tasks to supercomputers via Internet used in the Russian Academy of Sciences.

I used the following technologies: Linux/Windows; apache/IIS; MySQL; C/C++/Fortran/Visual Basic; MPI; Cray T3D .

- 1993-1998:

Developed an analog semiconductor neural network accelerator (Hopfield architecture).

My R&D tasks included the NN design, simulation, development of an electronic board connected with a PC to experiment with semiconductor NN, data set preparation, training, benchmarking, and optimization of this NN.

I used the following technologies: MS-DOS/Windows/Linux; C/C++/assembler for NN implementation; MPI for distributed training; PSpice for electronic circuit simulation; ADC, DAC, and LPT to measure semiconductor NN and communicate with a PC; Visual Basic to visualize experiments .

- 1991-1993:

Developed and sold software to automate financial operations in SMEs.

I used the following technologies: MS-DOS; Turbo C/C++; assembler for printer/video drivers; my own library for Windows management .

-

I am passionate about lifelong learning and regularly take Coursera and other online courses

to acquire new skills or refresh existing knowledge: www.linkedin.com/in/grigorifursin/details/certifications

-

Developed foundational methodologies and tools for the automatic co-design

of software and hardware from diverse vendors, enabling efficient

execution of emerging workloads with optimal speed, accuracy, energy, and

cost—leveraging machine learning, crowd-tuning, and crowd-learning. This

work anticipated advances in AutoML, workflow automation, agent-based

optimization, and federated learning.