Join the open MLCommons task force on automation and reproducibility to participate in the collaborative development of the Collective Knowledge v3 playground (MLCommons CK), powered by the Collective Mind automation language (MLCommons CM) and the Modular Inference Library (MIL), to automate benchmarking, optimization, design space exploration and deployment of Pareto-efficient AI/ML applications across any software and hardware from the cloud to the edge!

- 2023 June 1: Follow further news from our MLCommons task force on automation and reproducibility here.

- 2023 Feb 16: A new alpha CK2 GUI to visualize all MLPerf results is available here.

- 2023 Jan 30: A new alpha CK2 GUI to run MLPerf inference is available here.

- 2022 November: We are very excited to see that our new CK2 automation meta-framework (CM) was successfully used at the Student Cluster Competition'22 to make it easier to prepare and run the MLPerf inference benchmark in just under 1 hour. If you have 20 minutes, please check out this tutorial to reproduce the results yourself ;)

- 2022 September: We have released MLCommons CM v1.0.1 - the next generation of the MLCommons Collective Knowledge framework being developed by the public workgroup. We are very glad to see that more than 80% of all performance results and more than 95% of all power results were automated by the MLCommons CK v2.6.1 in the latest MLPerf inference round thanks to submissions from Qualcomm, Krai, Dell, HPE and Lenovo!

- 2022 July: We have pre-released CK2(CM) portable automation scripts for MLOps and DevOps: github.com/mlcommons/ck/tree/master/cm-mlops/script.

- 2022 March: We started developing the 2nd generation of the CK framework (aka CM) based on community feedback. Join our collaborative effort!

- 2022 February: We've helped with artifact evaluation at ASPLOS'22!

- 2021 September: We are excited to announce that we've donated our CK framework and the MLPerf inference automation suite v2.5.8 to MLCommons (github.com/mlcommons/ck and github.com/mlcommons/ck-mlops) to benefit everyone!

- 2021 June: New YouTube video from our partner: "What is OctoML?".

- 2021 June: We released CK v2.5.4 with many enhancements for packing ML models and automating the MLPerf(tm) inference benchmark.

- 2021 April: We are very excited to join forces with OctoML! Contact Grigori Fursin for more details!

- 2021 April: We have released CK v2.x under Apache 2.0 license based on the feedback from our users.

- 2021 March: See our ACM TechTalk "Reproducing 150 Research Papers and Testing Them in the Real World" on the ACM YouTube channel.

- 2021 March: The report from the "Workflows Community Summit: Bringing the Scientific Workflows Community Together" is available on arXiv.

- 2021 March: We have published an overview of the CK technology in the Philosophical Transactions A, the world's longest-running journal, where Newton published: DOI, arXiv.

- 2021 February 11: See the announcement for our ACM TechTalk about reproducing 150 ML and Systems papers and introducing the CK technology.

- 2020 December: The cTuning foundation is honored to join MLCommons as a founding member to accelerate machine learning innovation along with 50+ leading companies and universities.

- 2020 November: As suggested by our users, we collected all portable AI and ML workflows in one GitHub repository and in this adaptive CK Docker container.

- 2020 October: CK helped to automate and reproduce many MLPerf inference v0.7 submissions. See CK solutions for the open division & edge devices.

- 2020 September: See the Reddit discussion about our painful experience reproducing 150 ML and Systems papers.

Windows/Linux/MacOS/Android:

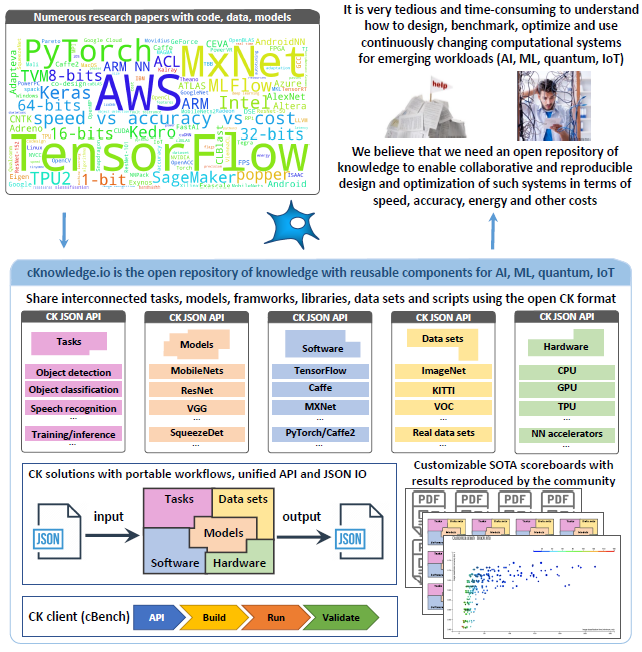

The CK framework is a common playground that connects researchers and practitioners so they can learn how to collaboratively design, benchmark, optimize and validate innovative computational technology, including self-optimizing and bio-inspired computing systems.

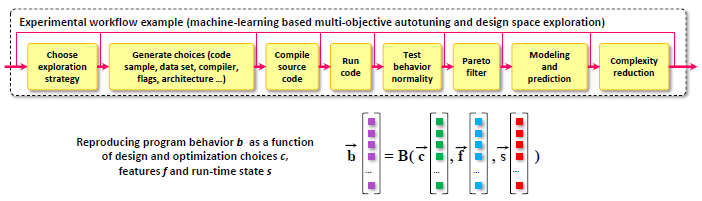

Our mission is to organize and systematize all our AI, ML and systems knowledge

in the form of portable workflow, automation actions and reusable artifacts

using our open CK platform

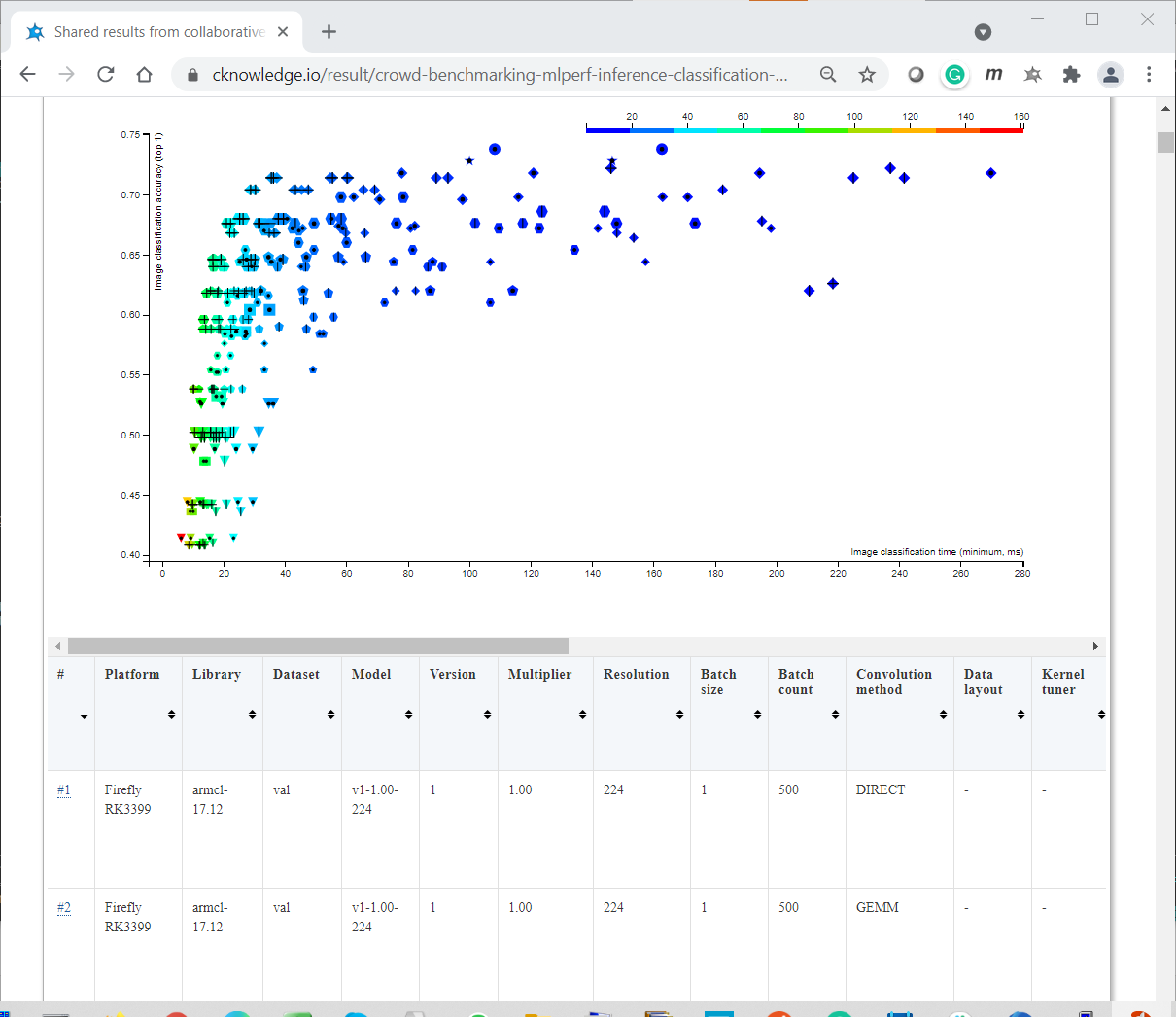

with reproducible papers

and live SOTA scoreboards for crowd-benchmarking.

We continue using CK to support related initiatives, including MLPerf, PapersWithCode, ACM artifact review and badging, and artifact evaluation.